It’s known as the overview effect, Re/Cappers. It’s a deeply reflective state, often experienced by astronauts when they look down at that precious celestial orb we call home, from far above it.

Now the thing about space is, 1) no one can hear you scream (IYKYK), because 2) it be MAD dangerous. That’s why strides like our lead, LiDAR-laden Re/Cap are so enthralling. It’s about earth observation & capture. High-res 3D mapping. Advanced payloads. Remote sensing.

But if Earth is the center of the universe, one veteran was the center of observing it, through surveys, overlapping imagery, and implacable curiosity. Meet U.S. Army Captain Albert W. Stevens, 1886-1949, a Maine-born engineer turned Army Air Corps innovator who embodied the audacious spirit of early aerial capture.

His remote genesis was the fog and fury of World War I, where he wielded oblique aerial shots and infrared-sensitive film to pull order from chaos. Stevens likely didn’t know it at the time, but his overlapping ways in aerial photography were advancing photogrammetry and remote sensing decades before the terms became mainstays.

To think Stevens would be sated with mere perspective would be laughable; he wanted the horizon behind the horizon. After The Great War, peacetime offered no pause. He conducted aerial photography surveys of the U.S. throughout the ’20s. Then, from low-flying biplanes, he leapt to the stratosphere in search of new views, new truths…and gave us all a new meaning of Earth observation.

1930. The Great Depression has humanity in a vise grip, leaving it thirsting for anything resembling delight. Stevens, aloft over Argentina, captured the first photograph to show the curvature of the Earth. Two years later, he snapped the Moon’s shadow racing across the Earth during a solar eclipse. Then, this d-airdevil aerially mapped Maine, and snapped a photograph from 23,000 feet that captured Mt. Shasta from 331 miles away, encompassing 7,200 square miles in a single, stunning negative.

Next was equal parts science/spectacle/Evel Knievel. Twice in the mid-1930s, Stevens and his Army colleagues ascended into the thin blue via sealed balloons, Explorer I and Explorer II. These excursions were sponsored by the National Geographic Society and watched breathlessly by a public increasingly enchanted by skyward ambition. The first flight ended in disaster-tinged drama, the gondola plummeting nearly 15 miles after the balloon ruptured; all three men parachuted to safety, their exploits front-page news. Not to be deterred, Stevens returned the following year, crewing Explorer II with Captain Orvil Anderson. Together, they soared to a record 72,395 feet, snapping photographs that for the first time revealed the boundary between the troposphere and the stratosphere.

Stevens was not Earth observation’s inventor, per se. But the good Captain darn sure helped industrialize it. While photogrammetry technically existed decades before his Maine boots touched a balloon basket, Stevens parlayed fragmented imaging into systematic doctrine. His overlapping aerial surveys were the methodological DNA that would permeate every subsequent Earth observation mission. His stratospheric boundary documentation was remote sensing in its purest form.

Stevens died in 1949, 23 years before the multispectral scanner-wielding Landsat 1. Every LiDAR pulse, advanced payload, and hyperspectral scan of Earth's changing surface carries forward the systematic rigor he pioneered.

He captured the Earth, sure. But most vitally, he taught us how to see it. And today, as we’ll soon cover, we’re still broadening his horizons…in 3D.

1936’s view from the highest point above Earth ever recorded. Image credit National Geographic via Geographicus

What’s Cappenin’ This Week

Quick ‘Caps

At this point, NUVIEW is OLDNEWS when it comes to getting dough from the DoD. But the most recent check cut is an eye-popper, and a testament to the power of LiDAR for mapping & observing **checks notes** all of Earth.

A rendering of NUVIEW’s LiDAR Satellite. Image credit Space Flight Laboratory

$5 million, folks, to build what amounts to Earth's most sophisticated orbital scanning system: a constellation of space-based LiDAR satellites delivering continuous, high-res 3D mapping of the entire planet. NUVIEW CEO Clint Graumann positions this as America's strategic response to foreign LiDAR dependencies. The funding enables NUVIEW to advance payloads that address national security priorities while opening new commercial markets for global 3D data.

NUVIEW’S NUPAYLOADS will serve civil agencies, defense orgs, and commercial enterprises worldwide. The broader play: establish American dominance in orbital 3D mapping while building commercial infrastructure that redefines how governments, militaries, and corporations understand planetary geometry. Pulse 2.0 has their finger on it, linked below.

If Arthur C. Clarke was right that any sufficiently advanced technology is indistinguishable from magic, then these Princeton researchers have just enrolled in the Hogwarts Graduate Program.

Their "Reality Promises" project turns invisible robots into your personal pack mules while you wave around in VR like an amphetamined-up conductor. They've trained 3D Gaussian splats using an iPhone 14 Pro and some elbow grease to create digital twins of workspaces. These splats (capped at a modest 25k for scenes and 3k-5k for objects) render environments so convincingly that when you virtually summon a tube of Pringles, a hidden Stretch 3 robot scurries about to manifest your digital desires into crunchy reality. The pièce de résistance involves a digital bee that appears to teleport snack foods through space-time, when really it's just a hide-and-seek-loving robot.

The formal paper will be presented at the ACM Symposium on User Interface Software and Technology. Check out the jaw-droppin’ footage below, or Wonderful Engineering’s analysis of it: what headset, Jawset Postshot, the biggest current limitation, the plan for boosted splat counts, achieving full invisibility, perfecting color blending, telepresence & entertainment.

Moby-Dick’s Captain Ahab chased one white whale across endless seas with harpoons and obsession. But these modern whale seekers traded in weapons for tech, and vengeance for protection.

The researchers hail from the New England Aquarium, which has been an exemplar of whale research for 40 years. Their custom drone-grammetry tracks dwindling whale populations, measuring body condition and health in critically endangered North Atlantic right whales and in killer whales in Alaska.

Sea rotors > sea legs. Image credit Véronique LaCapra, Woods Hole Oceanographic Institution

Dr. John Durban leads the Anderson Cabot Center's photogrammetry program, combining high-res aerial imaging with decades of population data. This yields non-invasive health assessments, and helps explain why whale numbers are declining.
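
For the curious, here is a rough sketch of how a straight-down aerial photo can become a body measurement. It is not the Aquarium team's actual pipeline, which the write-up doesn't detail. It uses the standard pinhole-camera relationship between altitude, focal length, and pixel size; the function names, camera numbers, and whale pixel counts are all invented for illustration, and the width-to-length ratio is just one simple stand-in for "body condition."

```python
def ground_sample_distance_m(altitude_m, focal_length_mm, pixel_pitch_um):
    """Metres of sea surface covered by one pixel in a straight-down image."""
    return altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)


def pixels_to_metres(length_px, gsd_m):
    """Convert a distance measured in image pixels to metres."""
    return length_px * gsd_m


# Hypothetical flight and camera values (not from the article).
gsd = ground_sample_distance_m(altitude_m=50.0, focal_length_mm=25.0, pixel_pitch_um=4.0)

# Hypothetical measurements traced on one image, in pixels.
body_length_m = pixels_to_metres(1600, gsd)
body_width_m = pixels_to_metres(400, gsd)

# Assumed proxy: a wider body relative to its length suggests better condition.
width_to_length = body_width_m / body_length_m

print(f"{gsd * 100:.1f} cm per pixel")
print(f"length {body_length_m:.1f} m, width {body_width_m:.1f} m, ratio {width_to_length:.2f}")
```

The catch in practice is altitude: any error in the height above the water scales straight into the measurement, which is why the sketch treats it as a given input rather than estimating it.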

Durban's team is transitioning from boat-based drone operations in Cape Cod Bay to research aircraft outfitted with specialized cameras. This upgrade extends photogrammetry capabilities beyond coastal waters and enables year-round data collection when boat operations are more The Perfect Storm than the perfect scan.

Marine Technology News’ write-up below gets to the North Gulf Oceanic Society, the aerial survey team & oceanographic collaborations, how fishing gear entanglements and climate change relate, feeding areas, and causes for pessimism & optimism.

If you’re reading this newsletter, we need not preach the glory of built environment innovation.

But what about our own internal environment? After all, were we to prolong our fleshy shelf life, wouldn’t that just mean more days to bolster that built environment?

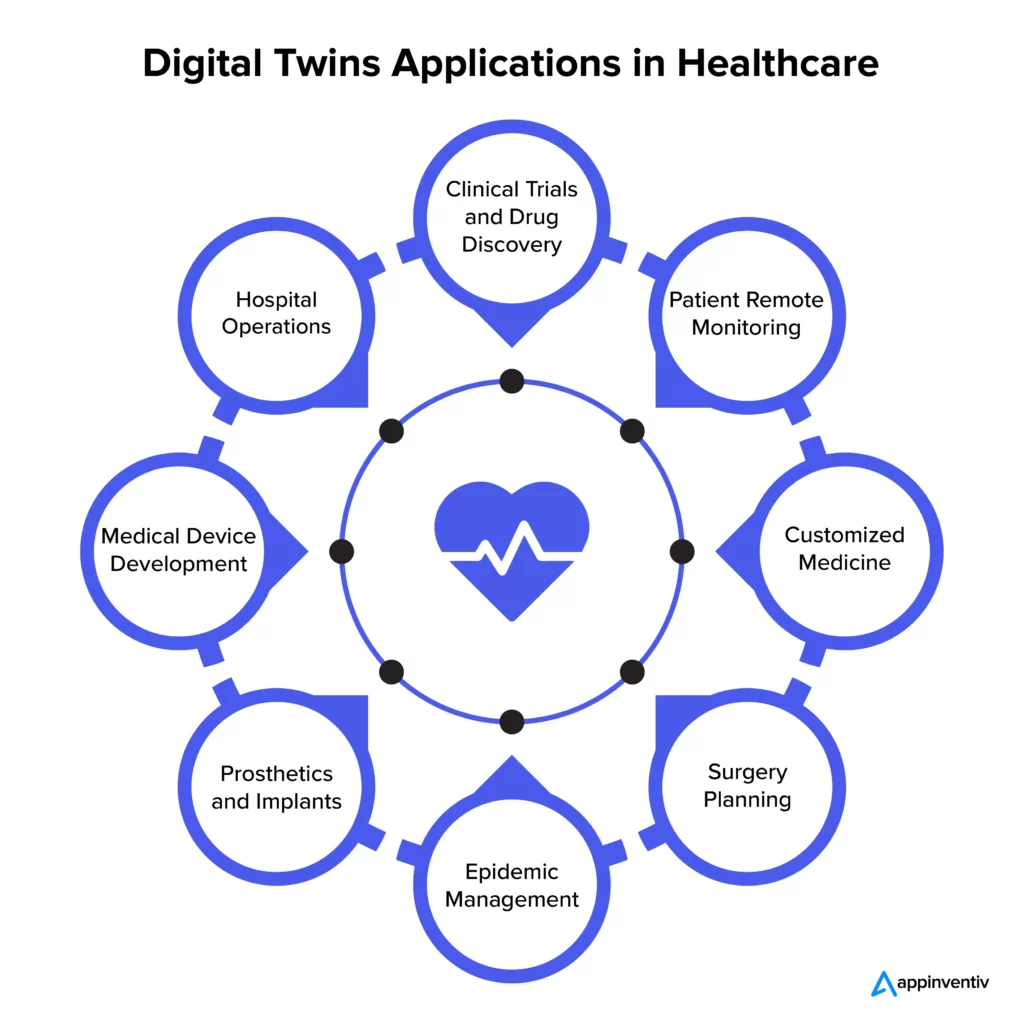

Appinventiv sure thinks so, having recently published a sweeping, case-study-rich column, “Digital Twins in Healthcare.”

Soooo, 2026 for filling out paperwork with info you've already provided? Image credit Appinventiv

It traces how virtual replicas of physical systems are overhauling patient care. The market is exploding, projected to hit $21.1 billion by 2028 with nearly 60% annual growth. Johns Hopkins just landed the first FDA-approved virtual heart model, letting doctors simulate cardiac behavior and personalize treatments with bewildering precision.

So we got surgeons virtually rehearsing procedures, AI models optimizing COVID-19 responses, and drug discovery simulations that could slash development timelines. Real results are already flowing: through a digital twin of its radiology department, Dublin's Mater Hospital drastically shrank patient wait times while boosting scan capacity by over 25%.

Of course, challenges are legion, articulated below amid a sea of case studies and rundowns of key technologies.

Melbourne’s West Gate Bridge Collapse of 1970. Image credit Public Records Office of Victoria

Some engineering failures leave indelible marks: a jagged interruption in a city’s skyline, the ache of loss within a community, and lessons that ripple far beyond the rubble. Melbourne’s West Gate Bridge disaster provided all three, en route to becoming Australia’s worst-ever industrial accident.

Construction was two years in when tragedy struck on October 15, 1970. It began as workers sought to correct a camber discrepancy - an 11.4 cm height difference between two half-girders - that threatened the integrity of the steel box girder structure. Site engineers resorted to stacking ten massive 8-ton concrete blocks atop the higher section of the span, inadvertently causing the girder to buckle, a sign of increasing structural failure.

In a desperate bid to flatten the bulge, an order was issued to remove over thirty bolts from a critical splice in the upper flange. Witnesses recalled the steel turning blue with stress and bolts snapping “like gunfire” as the structure groaned ominously, punctuated by a symphony of pinging sounds. Moments later, the fully loaded span violently snapped back and plummeted 50 meters into the Yarra River mud below, unleashing 2,000 tons of steel and concrete in a hellish explosion of diesel fire, twisted girders, and flying debris.

More than sixty men were either inside or atop the span, or taking lunch in a row of huts directly beneath. The crude force of the collapse eventually took 35 lives. Eighteen were wounded, some critically, with many more suffering trauma that lingered for years.

The Royal Commission, established immediately, examined 52 witnesses and 319 exhibits over 73 days. The final report revealed not only technical flaws in the bridge’s design and construction methods, but also damning deficiencies in supervision, management, communication, and decision-making. The disaster was “utterly unnecessary,” caused not by nature or untested materials but by miscalculations, errors of judgment, and institutional failures at multiple levels. Almost no one was exonerated: material suppliers were found blameless, but everyone else, from designers to contractors, labor to authority, shared responsibility.

Had the modern reality capture arsenal existed, averting the West Gate disaster could have gone from improbable to probable. And given the ongoing prevalence of similar bridge woes, that knowledge is gold.

The fatal 11.4 cm camber discrepancy in the half-girders may have been identified and addressed with precise context before any physical correction was attempted. 3D scanning and photogrammetry could have recorded the exact as-built state of every girder segment immediately after placement, revealing the camber misalignment early enough for engineers to explore safer corrective strategies. Instead of relying on visual judgment and manual measurements, a comprehensive digital record would have made every geometric deviation unmistakably clear.
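
To make that as-built check concrete, here is a minimal sketch, assuming scan data has already been reduced to top-flange elevations at matching stations along two half-girders. The `Station` structure, the 2 cm tolerance, and every elevation below are hypothetical and not drawn from West Gate records; only the 0.114 m mid-span gap mirrors the figure cited above.

```python
from dataclasses import dataclass


@dataclass
class Station:
    chainage_m: float    # distance along the span
    north_elev_m: float  # scanned top-flange elevation, one half-girder
    south_elev_m: float  # scanned top-flange elevation, the other half-girder


def camber_mismatches(stations, tolerance_m):
    """Return (chainage, difference) wherever the two half-girders
    differ in elevation by more than tolerance_m."""
    flagged = []
    for s in stations:
        diff = s.north_elev_m - s.south_elev_m
        if abs(diff) > tolerance_m:
            flagged.append((s.chainage_m, round(diff, 4)))
    return flagged


# Invented survey values for illustration only.
survey = [
    Station(0.0, 50.000, 50.000),
    Station(25.0, 50.310, 50.250),
    Station(50.0, 50.484, 50.370),  # 0.114 m apart, like the gap cited above
    Station(75.0, 50.300, 50.245),
    Station(100.0, 50.000, 50.000),
]

flagged = camber_mismatches(survey, tolerance_m=0.02)
for chainage, diff in flagged:
    print(f"chainage {chainage:.0f} m: half-girders differ by {diff * 100:.1f} cm")
```

A real workflow would compare dense point clouds against the design model rather than a handful of stations, but the idea is the same: measure, difference, and flag before anyone reaches for a concrete block.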

Real-time structural health monitoring could have tracked stresses in the girders as crews incrementally loaded the span and initiated adjustments. Observable buckling would have been flagged as a high-risk condition well before bolts were removed. These alerts could have paused work and brought in additional expertise to avoid hastily applied, unsafe remediation.
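
As a rough illustration of that kind of alerting, the sketch below walks through strain readings taken after each load step and says when to stop. It is an assumption-laden toy, not a real monitoring product: the function name, the microstrain values, and both limits are made up, and a real system would set its thresholds from the structural design.

```python
def review_load_steps(readings, stop_limit, max_jump):
    """Return (step, reason) for the first load step at which work
    should pause, or None if every reading looks acceptable.

    readings: ordered (load_step, microstrain) pairs.
    stop_limit: absolute strain at which work must stop.
    max_jump: largest acceptable rise between consecutive steps.
    """
    previous = None
    for step, microstrain in readings:
        if microstrain >= stop_limit:
            return step, "strain limit reached"
        if previous is not None and microstrain - previous > max_jump:
            return step, "strain rising faster than expected"
        previous = microstrain
    return None


# Hypothetical gauge readings, one per added block of load.
readings = [(1, 210), (2, 400), (3, 605), (4, 1150), (5, 1900)]

decision = review_load_steps(readings, stop_limit=1500, max_jump=300)
print(decision)
```

Here the pause triggers at step 4, before the absolute limit is ever hit, because the strain jumps far more than in earlier steps. Watching the rate of change, not just the ceiling, is what gives a crew time to step back.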

Robotic inspection systems, like the 2025 American Society of Civil Engineers award winner, could have further upped safety. Using a trio of drones equipped with infrared cameras, LiDAR, and crack probes, such systems can fly, crawl on girders, and deploy miniature crawlers to conduct detailed inspections of steel and concrete components - tasks that would have enabled early detection of structural anomalies and damage without risking worker safety.

Today, bridge construction & maintenance still grapple with your camber corrections, joint integrity, and fatigue cracks. Yet each carries risk that reality capture can reduce through precise geometry verification, early damage detection, and thorough project communication.

Thirty-five lives were lost to West Gate, from what we didn't know. Today, there's no excuse for not knowing.

Reality Capture Network • Copyright 2025 • All rights reserved